Last update on: 9:15 am April 26, 2024 by fashionabc

Sometimes the best way to solve a complex problem is to take a page from a children's book. That's the lesson Microsoft researchers learned while figuring out how to pack a punch into a small package. We're referring to the launch of Microsoft's AI model Phi-3 Mini, which has 3.8 billion parameters and is now available on Azure, Hugging Face and Ollama. The launch doesn't come as a surprise, as Microsoft has been among the frontrunners in the AI space, whether by investing heavily in OpenAI or by rolling out its own AI chatbot tool. The launch of Phi-3 Mini reflects the company's strategy of catering to a broader clientele by offering affordable options in the rapidly evolving field of AI technology.

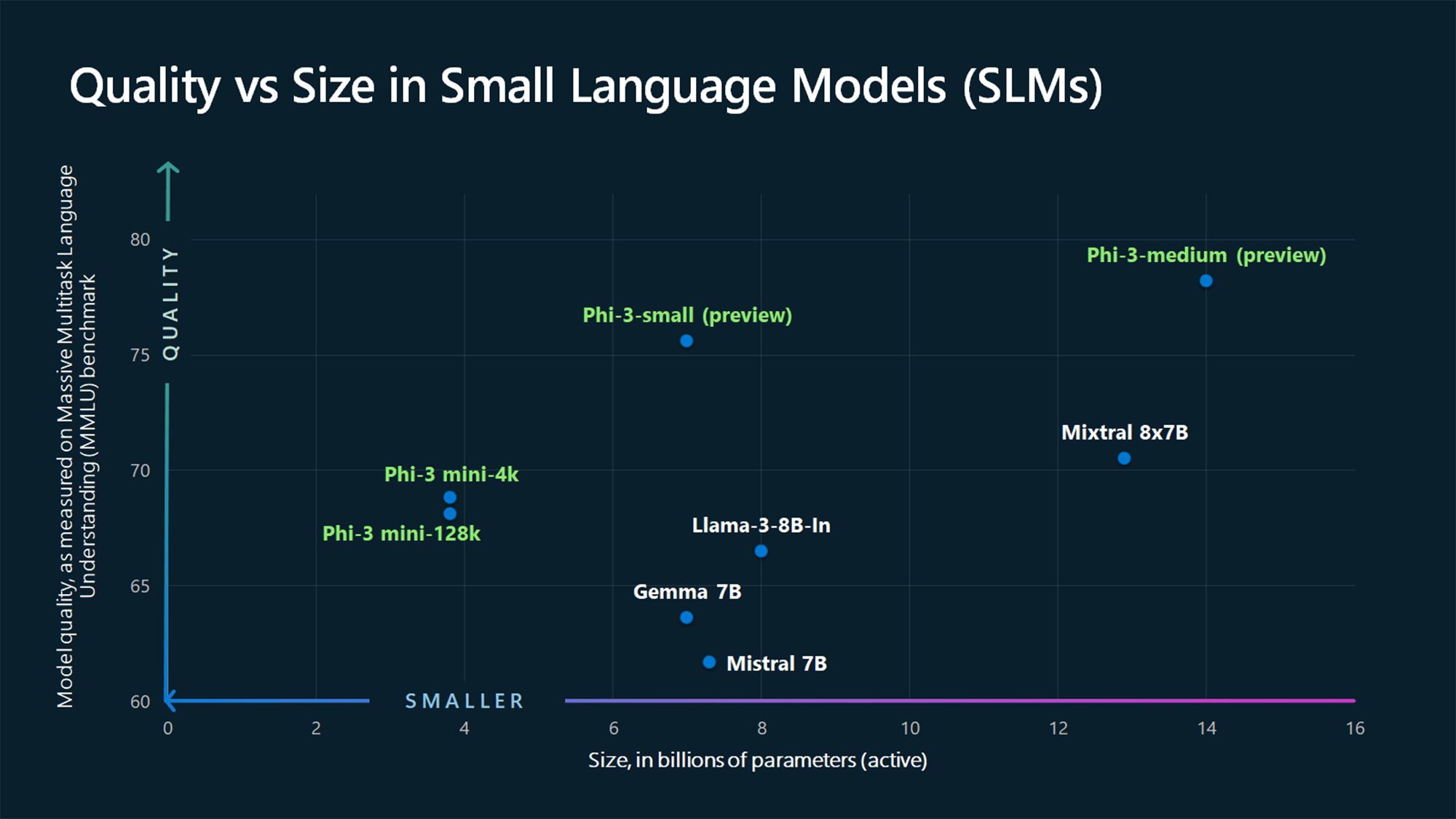

Graphic illustrating how the quality of new Phi-3 models compares to other models of similar size. (Image courtesy of Microsoft)

The inspiration behind this new model is quite interesting. Last year, after spending the day thinking through potential solutions to machine learning riddles, Microsoft's Ronen Eldan was reading bedtime stories to his daughter when he thought to himself, "How did she learn this word? How does she know how to connect these words?" That led him to wonder how much an AI model could learn using only words a four-year-old could understand, and subsequently to an innovative training approach that has produced a new class of more capable small language models that promise to make AI accessible to a larger audience.

Compared to their larger counterparts, small AI models are often cheaper to run and are better suited to personal devices like phones and laptops. Per a company release, "Phi-3 models outperform models of the same size and next size up across a variety of benchmarks that evaluate language, coding and math capabilities, thanks to training innovations developed by Microsoft researchers." Phi-3 builds on what its predecessors learned: while Phi-1 focused on coding and Phi-2 began to learn to reason, Phi-3 is better at both coding and reasoning. Designed to handle simpler tasks, small language models (SLMs) like Phi-3 Mini offer practical solutions for companies operating with limited resources, which aligns with Microsoft's commitment to democratise AI and make it accessible to a broader range of businesses.

“What we’re going to start to see is not a shift from large to small, but a shift from a singular category of models to a portfolio of models where customers get the ability to make a decision on what is the best model for their scenario,” Sonali Yadav, principal product manager for Generative AI at Microsoft, said in a company release.

Microsoft's competitors have their own small AI models as well, most of which target simpler tasks like document summarization or coding assistance. Google's Gemma 2B and 7B are suited to simple chatbots and language-related work. Anthropic's Claude 3 Haiku can read dense research papers with graphs and summarize them quickly, while Meta's recently released Llama 3 8B may be used for some chatbots and for coding assistance.